Summary

In this work, I develop and compare two autoregressive image generators—VAR and VAR‑CLIP—implement Monte Carlo Dropout at inference to obtain pixel‑wise uncertainty maps, and analyze how uncertainty correlates with generation fidelity, semantic regions, and prompt design. VAR leverages coarse‑to‑fine “next‑scale prediction” to achieve state‑of‑the‑art FID and Inception Score12, while VAR‑CLIP incorporates CLIP text embeddings for rich semantic conditioning34. Enabling dropout during sampling reveals a clear fidelity–confidence trade‑off: as DropPath rate increases, FID worsens and average σ rises52. Masking with SAM shows lower uncertainty over foreground objects than backgrounds6, and prompt complexity experiments uncover a non‑linear peak in σ at moderate ambiguity7. Detailed captions both improve image quality and reduce uncertainty, demonstrating the importance of prompt clarity8.

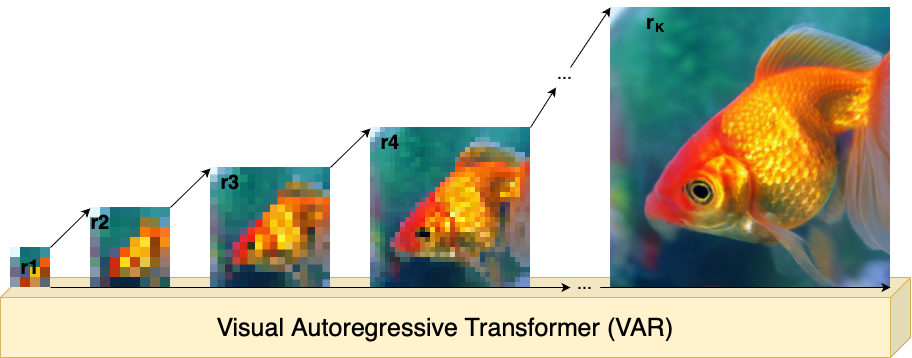

VAR

I implement VAR by framing image synthesis as a multi‑scale prediction task, where at each resolution the model predicts the next finer-scale image tokens in an autoregressive fashion1. This coarse‑to‑fine transformer architecture allows VAR to learn visual distributions efficiently and surpass diffusion models on ImageNet 256×256 benchmarks19.

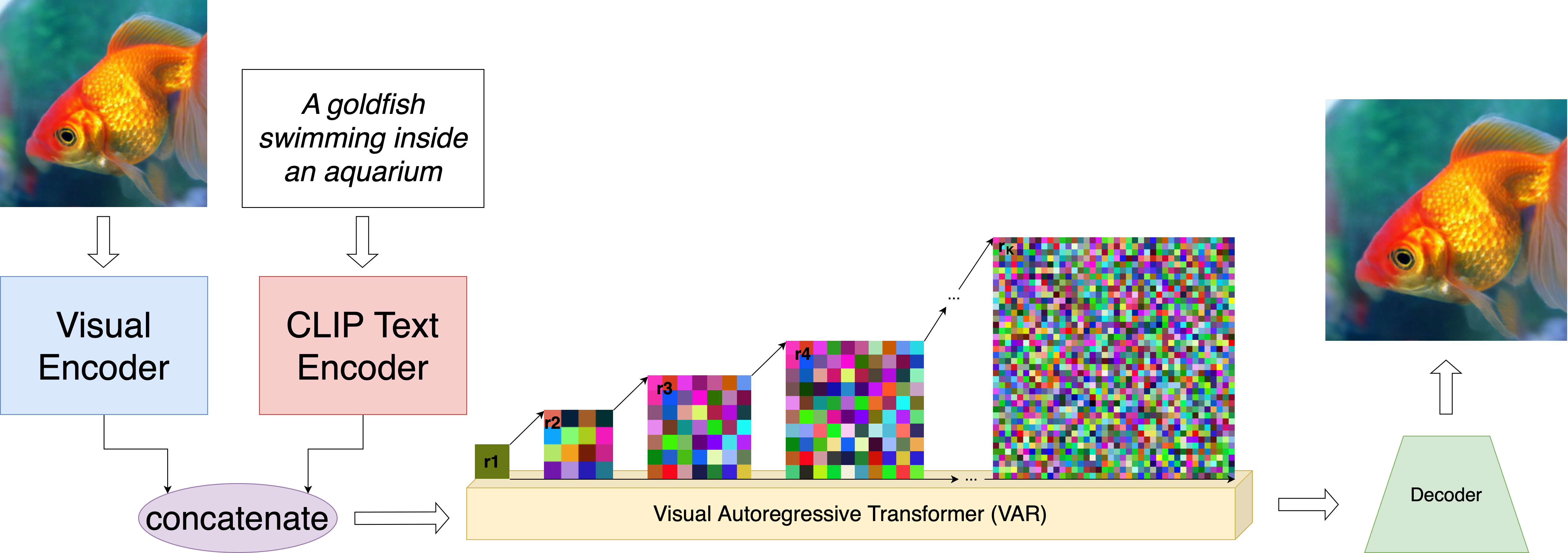

VAR‑CLIP

Building on VAR’s backbone, VAR‑CLIP feeds CLIP text embeddings as conditioning context at every scale. Captions are encoded by a pre‑trained CLIP model into a fixed‑length vector, which guides token prediction to yield semantically aligned outputs across diverse prompts3410.

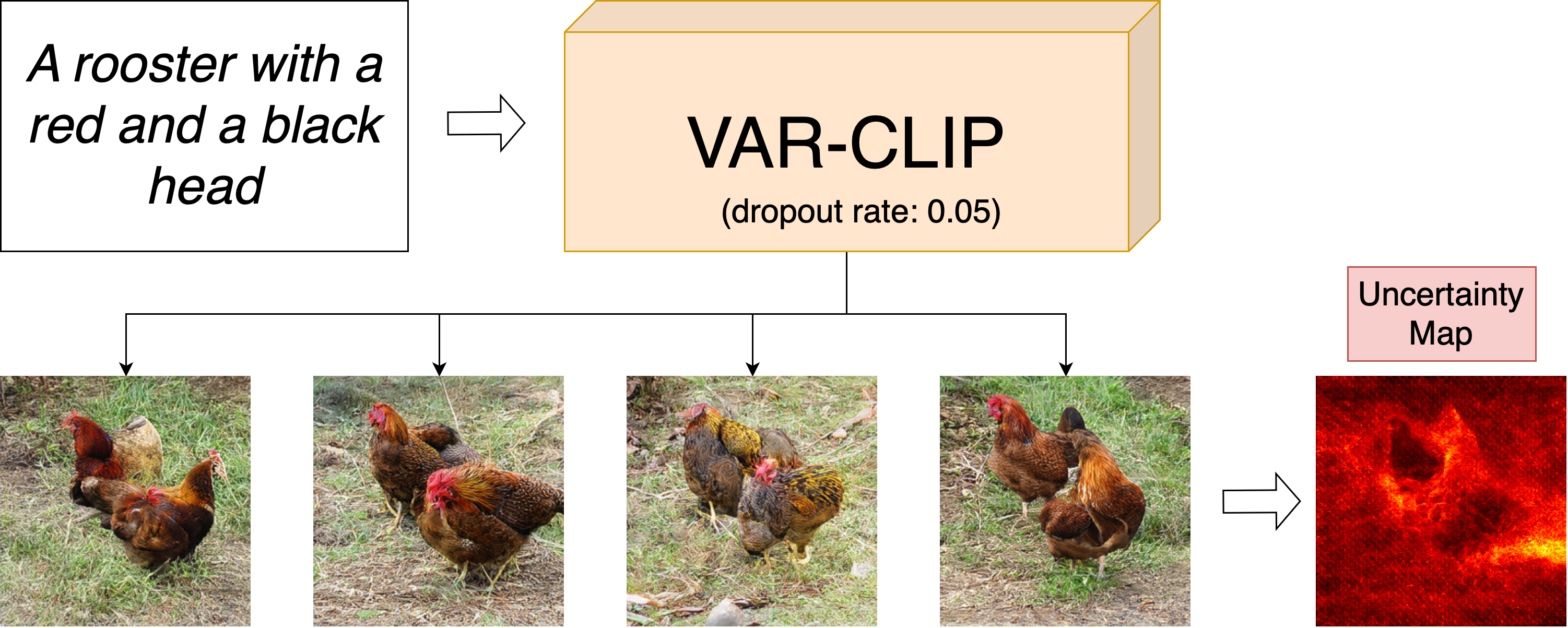

MC Dropout

To approximate Bayesian inference, I activate dropout layers during inference and perform multiple forward passes per prompt. Aggregating 50 samples per seed, I compute pixel‑wise standard deviations (σ) as epistemic uncertainty estimates without modifying training regimes5.

Experiments with Tables and Images

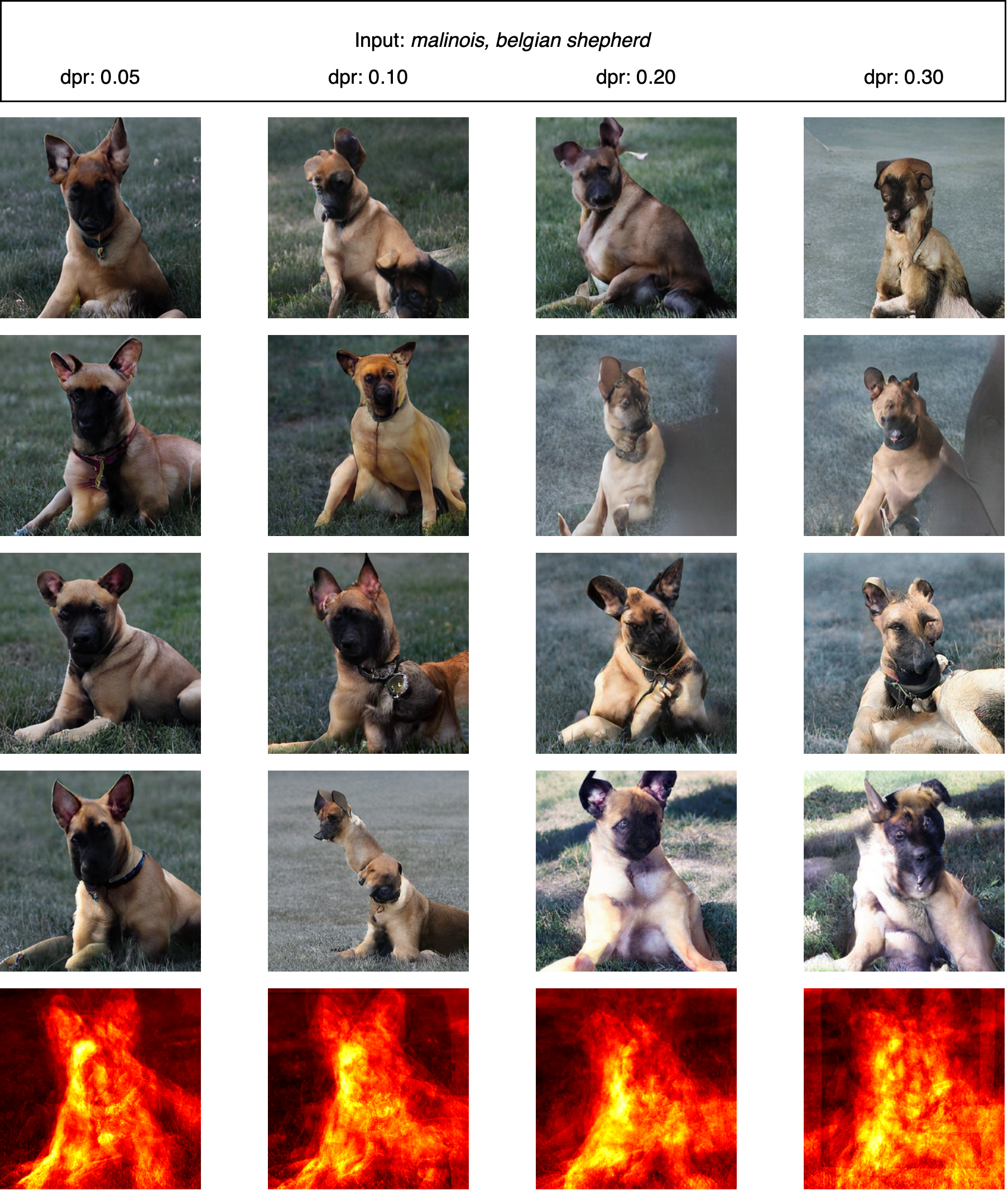

Performance–Uncertainty Trade‑off

For the prompt “Belgian shepherd,” I sweep DropPath rates and record FID, Inception Score (IS), Central Moment Discrepancy (CMMD), and average σ:

| DropPath Rate | FID ↓ | IS ↑ | CMMD ↓ | σ |

|---|---|---|---|---|

| 0.05 | 18.54 | 78.32 ± 1.73 | 4.357 | 0.1927 |

| 0.10 | 18.98 | 73.11 ± 1.31 | 4.384 | 0.2107 |

| 0.20 | 22.68 | 64.13 ± 1.91 | 4.536 | 0.2198 |

| 0.30 | 30.57 | 51.82 ± 0.91 | 4.833 | 0.2259 |

As dropout increases, generation quality degrades (higher FID, lower IS) while uncertainty rises, illustrating the fidelity–confidence trade‑off28.

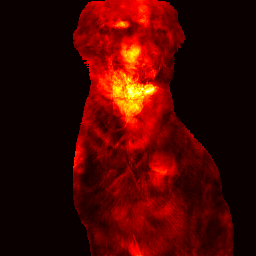

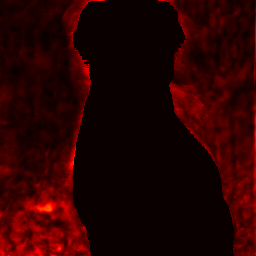

Region‑Specific Confidence

Using SAM to segment “Golden retriever” images, I compare foreground and background uncertainty:

| Region | σ |

|---|---|

| Object | 0.1975 |

| Background | 0.1993 |

Foreground regions exhibit slightly lower σ, indicating higher confidence in semantically salient areas6.

Semantic Entanglement

I generate images for prompts with one, two, or three concepts and measure uncertainty:

| Concepts | FID ↓ | IS ↑ | CMMD ↓ | σ |

|---|---|---|---|---|

| 1 | 18.54 | 78.32 ± 1.73 | 4.357 | 0.1927 |

| 2 | 35.96 | 31.53 ± 0.52 | 4.381 | 0.1955 |

| 3 | 47.99 | 21.16 ± 0.43 | 4.528 | 0.1924 |

Uncertainty peaks at two concepts, reflecting maximum semantic ambiguity before dilution7.

Prompt Descriptiveness

Comparing a simple caption against a richly descriptive one:

| Prompt Type | FID ↓ | IS ↑ | CMMD ↓ | σ |

|---|---|---|---|---|

| Simple | 18.54 | 78.32 ± 1.73 | 4.357 | 0.1927 |

| Complex | 15.14 | 103.49 ± 1.55 | 3.785 | 0.1794 |

Descriptive prompts both boost fidelity and reduce uncertainty, underscoring the value of unambiguous guidance8.

Conclusions

- VAR vs VAR‑CLIP: VAR excels in raw fidelity, while VAR‑CLIP enhances semantic alignment at the cost of higher baseline uncertainty13.

- MC Dropout: A practical Bayesian approximation that reveals how dropout rates shape the fidelity–confidence trade‑off5.

- Semantic Relevance: SAM‑based masking confirms greater confidence in salient regions6.

- Prompt Design: Both complexity and descriptiveness critically influence uncertainty, with moderate ambiguity peaking σ and detailed captions minimizing it87.

References

Footnotes

-

Keyu Tian, Yi Jiang, Zehuan Yuan, Bingyue Peng, Liwei Wang. “Visual Autoregressive Modeling: Scalable Image Generation via Next-Scale Prediction.” NeurIPS 2024 [arXiv:2404.02905]. ↩ ↩2 ↩3 ↩4

-

Martin Heusel, Hubert Ramsauer, Thomas Unterthiner, Bernhard Nessler, Sepp Hochreiter. “GANs Trained by a Two Time-Scale Update Rule Converge to a Local Nash Equilibrium.” NeurIPS 2017 [arXiv:1706.08500]. ↩ ↩2 ↩3

-

Qian Zhang, Xiangzi Dai, Ninghua Yang, Xiang An, Ziyong Feng, Xingyu Ren. “VAR‑CLIP: Text-to-Image Generator with Visual Auto-Regressive Modeling.” arXiv:2408.01181 (2024). ↩ ↩2 ↩3

-

Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, Gretchen Krueger, Ilya Sutskever. “Learning Transferable Visual Models From Natural Language Supervision.” arXiv:2103.00020 (2021). ↩ ↩2

-

Yarin Gal, Zoubin Ghahramani. “Dropout as a Bayesian Approximation: Representing Model Uncertainty in Deep Learning.” ICML 2016 [arXiv:1506.02142]. ↩ ↩2 ↩3

-

Alexander Kirillov, Eric Mintun, Nikhila Ravi, Hanzi Mao, Chloe Rolland, Laura Gustafson, Tete Xiao, Spencer Whitehead, Alexander C. Berg, Wan‑Yen Lo, Piotr Dollár, Ross Girshick. “Segment Anything.” ICCV 2023 [arXiv:2304.02643]. ↩ ↩2 ↩3

-

Werner Zellinger, Thomas Grubinger, Edwin Lughofer, Thomas Natschläger, Susanne Saminger-Platz. “Central Moment Discrepancy for Domain-Invariant Representation Learning.” ICLR 2017 [arXiv:1702.08811]. ↩ ↩2 ↩3

-

Tim Salimans, Ian Goodfellow, Wojciech Zaremba, Vicki Cheung, Alec Radford, Xi Chen. “Improved Techniques for Training GANs.” NIPS 2016 [arXiv:1606.03498]. ↩ ↩2 ↩3 ↩4

-

FoundationVision. “VAR GitHub Repository.” GitHub (2024), https://github.com/FoundationVision/VAR. ↩

-

daixiangzi. “VAR‑CLIP GitHub Repository.” GitHub (2024), https://github.com/daixiangzi/VAR-CLIP. ↩